☎️ AWHL x Chalmers ☎️

The Assaulted Women’s Helpline (AWHL) is a domestic violence service that counsels and supports survivors. AWHL partnered with Ample Labs to build a support pipeline into Chalmers, Ample Labs’ chatbot.

The Problem Statement: Women fleeing violence have no access to domestic violence resources or counselors through Chalmers.

How might we build an ethical interaction to serve those users?

MY ROLE

Lead UX/UI Designer

UX Researcher

Prototyper

TIMELINE

3 months (shipped May ’21)

COMPANY

Ample Labs

TEAM

1 Product Manager

1 Lead Engineer

1 Design Systems Designer

1 Trauma Researcher

The design question:

How might we build an interaction to connect survivors with AWHL while also ethically collecting data on this user group?

👇🏾👇🏾👇🏾

Ample’s investors value utterances (free-text messages) because they provide nuanced user data.

Users prefer slots (preset options they can select) because slots let them find services discreetly.

Whenever Ample Labs has tried to collect sensitive user data like emails and phone numbers, there has been a dramatic drop-off from the platform!

The goal of this new interaction was to reduce that drop-off while encouraging users to type utterances to find AWHL.

I led a discovery session with our stakeholders (the AWHL hotline team) to learn their priorities and concerns about building abuse categories.

I then mapped each of those directly to a feature Chalmers offers.

👇🏾👇🏾👇🏾

AWHL Insights 👀

-Women who call into the helpline are unsure about the type of abuse they are experiencing

-AWHL wants to reach a younger audience of women who may be afraid to call in initially

-Pre-COVID, calls averaged 30-40 minutes, but they are now significantly shorter because women are working from home and do not want their partners to overhear

-Women usually open new tabs when trying to hide their screen from their partner

-The deaf and hard-of-hearing community prefers text communication to calls

-Women typically call in the early morning or evenings when they are not working

Chalmers 🤖

-Chalmers can describe a category of abuse BEFORE women reach the helpline

-The chatbot can attract younger women to seek help for the first time instead of going straight to the call line

-Women who do not want their partners to hear their calls can use the chatbot to quietly get information

-The deaf and hard-of-hearing community can use the chatbot for text-based assistance

-Chalmers is available 24/7

Once I understood the limitations and goals for this project, I began user research

(with the help of Ph.D. pros)

🔍🔍🔍

Who are the core users?

…and what are my assumptions?

Lower Income

The chatbot should mention financial violence, because a woman’s economic status likely affects her ability to leave

Younger Women

The chatbot should use everyday and accessible language

Women of Color

The chatbot should be ethically and racially sensitive

Great! Now, what are the business needs?

This is where I mapped out “north star” goals for the startup. This helped me ground the product.

Educate the user on the different types of domestic violence

Encourage free-text answers so natural language processing can scale

Refer the user to a hotline

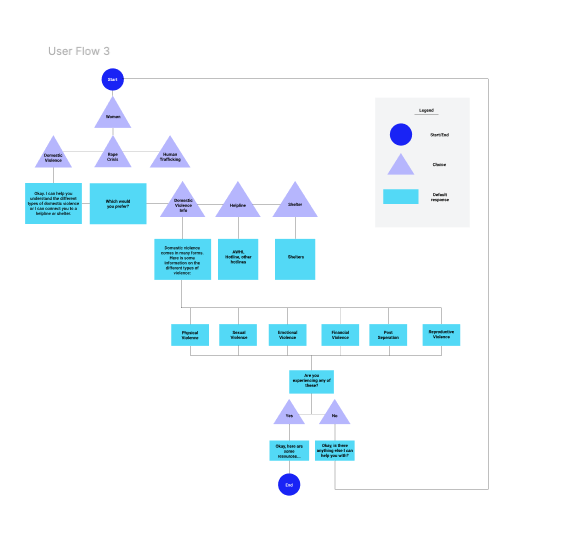

Once I understood the user and business needs, I created TASK FLOWS for the abuse categories.*

*Why? Fulfillment is easy (give the user AWHL’s number).

Figuring out HOW to get there while ethically collecting data is difficult.

👇🏾👇🏾👇🏾

I thought of this flow as a series of “IF” statements…

This helped me understand every possible interaction within the categories.

It was important to see how dense the interaction would get when relying on utterances vs. slots!

•IF onboarding is completed, bot shows crisis, food, things to do, clothing, and shelter categories.

•IF user selects “crisis”, bot shows general, youth, women, LGBTQ, and mental health categories.

•IF user selects “women”, bot shows domestic violence, sexual crisis, and human trafficking categories.

•IF user selects “domestic violence”, bot lists types of violence and shows an “I’m not sure” option.

•IF user selects a type of violence, bot provides the hotline.

•IF user selects “I’m not sure”, bot asks the user to provide a description (NLP).
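To make the “IF” structure concrete, here is a minimal Python sketch that models the flow above as a lookup table. It is illustrative only: the flow does not name the types of violence, so the sketch assumes the five word categories used later for hidden utterances, and the names `MENU`, `next_step`, `walk`, and the two terminal labels are hypothetical. Only the crisis → women → domestic violence branch is modeled.

```python
from typing import Dict, List, Union

# Hypothetical terminal actions (not Chalmers' real responses).
PROVIDE_HOTLINE = "provide AWHL hotline"
ASK_FOR_DESCRIPTION = "ask user to describe their situation (NLP)"

# Assumption: the flow does not list the violence types, so this sketch
# borrows the five word categories used for hidden utterances.
VIOLENCE_TYPES = ["physical", "sexual", "technological", "emotional", "financial"]

# Each key is a selection (or the post-onboarding state); each value is the
# list of options the bot shows next. Only one branch is modeled here.
MENU: Dict[str, List[str]] = {
    "onboarding complete": ["crisis", "food", "things to do", "clothing", "shelter"],
    "crisis": ["general", "youth", "women", "LGBTQ", "mental health"],
    "women": ["domestic violence", "sexual crisis", "human trafficking"],
    "domestic violence": VIOLENCE_TYPES + ["I'm not sure"],
}


def next_step(selection: str) -> Union[List[str], str]:
    """Apply the IF rules: return the next options, or a terminal action."""
    if selection in MENU:
        return MENU[selection]          # show the sub-categories
    if selection in VIOLENCE_TYPES:
        return PROVIDE_HOTLINE          # type of violence selected: provide hotline
    if selection == "I'm not sure":
        return ASK_FOR_DESCRIPTION      # fall back to a free-text utterance
    raise ValueError(f"No rule for selection {selection!r}")


def walk(path: List[str]) -> Union[List[str], str]:
    """Follow selections in order, checking each was offered by the previous step."""
    shown = next_step(path[0])
    for selection in path[1:]:
        if not isinstance(shown, list) or selection not in shown:
            raise ValueError(f"{selection!r} was not offered at this step")
        shown = next_step(selection)
    return shown
```

For example, `walk(["onboarding complete", "crisis", "women", "domestic violence", "physical"])` returns `PROVIDE_HOTLINE`: four selections after onboarding, which makes the depth of a slot-only path easy to count.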

I then created a WORKABLE PROTOTYPE

to test with 10 users:

-Task Success Rate: What percentage of users successfully navigate from the introductory message to the AWHL referral number within 2 minutes?

-System Usability Score: What average usability score do users give the interaction on a scale of 1-10?
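A minimal sketch of how these two metrics could be computed, assuming each test session records the seconds a user took to reach the AWHL referral number (or `None` if they never reached it) and their 1-10 rating. The `Session` type and function names are hypothetical. The “system usability score” here is a single 1-10 rating averaged across users, as described above; it differs from the standard 10-item System Usability Scale, which is scored 0-100.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional

TIME_LIMIT_SECONDS = 120  # "within 2 minutes" from the test plan


@dataclass
class Session:
    seconds_to_referral: Optional[float]  # None if the user never reached the AWHL number
    usability_rating: int                 # user's rating on a 1-10 scale


def task_success_rate(sessions: List[Session]) -> float:
    """Percentage of users who reached the referral number within the time limit."""
    if not sessions:
        raise ValueError("need at least one session")
    successes = sum(
        1
        for s in sessions
        if s.seconds_to_referral is not None and s.seconds_to_referral <= TIME_LIMIT_SECONDS
    )
    return 100 * successes / len(sessions)


def average_usability(sessions: List[Session]) -> float:
    """Mean of the users' 1-10 usability ratings."""
    if not sessions:
        raise ValueError("need at least one session")
    for s in sessions:
        if not 1 <= s.usability_rating <= 10:
            raise ValueError(f"rating out of range: {s.usability_rating}")
    return mean(s.usability_rating for s in sessions)
```

With 10 testers, each one is worth 10 percentage points, so 9 successes out of 10 gives a 90% task success rate.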

Here was my hypothesis:

👇🏾👇🏾👇🏾

Here are the results of my usability tests:

KEY TAKEAWAY:

Utterances remained a point of friction, even when I alternated between utterances and slots or rephrased the copy.

To find out whether familiarizing users with utterances could reduce the continued drop-off, I led design sessions with the same 10 users!

🧠 Design Sessions 🧠

This is where CODESIGN (collaborative design) sessions are vital. I led 10 sessions with 2 AWHL professionals and 10 women who had recently experienced domestic violence to better understand how to train our bot.

In these sessions, I ran activities like USER STORIES, AFFINITY MAPPING, and CATEGORY SORTING over Zoom with the same users who tested my first prototype, so I could better understand their motivations as they navigated it.

Here are some of the problems with my initial design

(according to our users)

•Assumes everyone knows different types of violence

•The number of options is overwhelming

•Needs more detailed information on violence

Here’s how I applied their feedback

👇🏾👇🏾👇🏾

•Described types of violence

•Cut down options and relied on slots to connect user to AWHL

The domestic violence flow I created has a 90% task success rate (from intro message to referral number).

It has an 8/10 system usability score (on average, users rate it 8/10 usable).

This interaction is now live. Check it out: https://chalmers.amplelabs.co

🎊 This brand new feature allows users to find domestic violence services for the 1ST TIME 🎊

Although the new flow lets users connect to AWHL safely, we still wanted to encourage them to use utterances going forward.

This would allow the team to collect important data about users.

To encourage users to type without blindsiding them with a prompt, I worked with our lead engineer to create hidden utterances.

The next few sections explain how.

👇🏿👇🏿👇🏿

Here are the categories of words, derived from the design sessions, that helped train our bot’s recognition.

We used these words to create a hidden violence utterance for the bot: if a user types any of these words, the bot refers them to a hotline.

Physical

Words that indicate physical violence: “hit, slap, beat, kick”, etc.

Sexual

Words that indicate sexual violence/manipulation. This may be particularly triggering and hard for our bot to understand.

Technological

Words that indicate technological abuse, control over use and/or access to devices: “phone, computer, laptop, passwords”.

Emotional

Words that indicate emotional violence: how the women feel, their self-esteem. This may be particularly hard for our bot to understand.

Financial

Words that indicate financial abuse/manipulation: “money, cards, bank, paycheck”.

🧠 Think of it like this:

When a user types in words like “hit” or “attack”, the bot processes it and refers them to a hotline
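As an illustration of the idea, here is a minimal Python sketch of a hidden utterance as a plain keyword match. It does not reproduce the Amazon Lex setup mentioned below; the keyword sets contain only the example words quoted above (with “attack” assumed to be physical), and sexual and emotional abuse are omitted because no example words are given for them. All names are hypothetical.

```python
import re
from typing import Dict, List, Set

# Hypothetical keyword sets built only from the example words in this case study.
VIOLENCE_KEYWORDS: Dict[str, Set[str]] = {
    "physical": {"hit", "slap", "beat", "kick", "attack"},
    "technological": {"phone", "computer", "laptop", "passwords"},
    "financial": {"money", "cards", "bank", "paycheck"},
}


def matched_categories(message: str) -> List[str]:
    """Return, alphabetically, the categories with a keyword appearing as a whole word."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    return sorted(cat for cat, keywords in VIOLENCE_KEYWORDS.items() if words & keywords)


def should_refer_to_hotline(message: str) -> bool:
    """The hidden utterance fires when any category matches."""
    return bool(matched_categories(message))
```

For example, `should_refer_to_hotline("He took my paycheck")` returns `True` even though the user never selected a menu option. Exact whole-word matching also misses variants like “hits” or “hitting”, which is why a keyword list like this is only a starting point next to an NLU service that classifies intents from sample utterances.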

*Please email me to see more of how I implemented hidden utterances in Amazon Lex for this design!

Here is a small side project I worked on while finishing the design above.

This is in the early iteration stage and is not shipped.

How might we design a safe exit button for users to escape the chatbot interface?

👇🏾👇🏾👇🏾

I created a graphic to illustrate how a safe exit button would work on a mobile device.

If the user taps the button, a new Google Search tab opens.

As Lead Designer for this side project, I chose to focus on the mobile functionality of a safe exit feature rather than include a web version.

This is because most users access Chalmers on a mobile device.

Here is the graphic I made to demonstrate why building a mobile safe exit is more useful than building one for the desktop interface.

Though creating a safe exit button for the mobile UI may seem simple, there are actually very important design considerations…

-requires user to know there is a safe exit feature

-requires user to know where the feature is

-safety exit button has to be highly visible

-needs to respond quickly

-must leave no trace or indicator that safe exit was selected for privacy

I translated that into this flow:

User anticipates danger > user selects safety exit button > Chalmers opens new tab

What’s next?

I am iterating on the safe exit button design to test with users.

Stay tuned!